In Neural Networks, “delta” means the error signal, which is the difference between what the network produced and what it should have produced. For each training example, the network checks its output against the known target and calculates this difference. Delta shows how far the model’s prediction is from the correct answer. This error signal helps guide learning.

For example, when teaching a child to ride a bike, each wobble or fall indicates how to correct their balance. Similarly, the delta in a neural network acts as that feedback, helping adjust the model’s weights with each mistake.

For a simple single-layer network, like a perceptron, the delta rule helps update weights easily. The network has a target output, called t, and an actual output, called y. The difference between these outputs is called the delta, which is t minus y. The classic delta rule, also known as the perceptron learning rule, changes each weight by using this error (the delta), the input, and a small learning rate. In formula terms, this is written as

Δw = η(t – y)x.

To explain it, if the output is too low (a negative delta), we increase the weight; if the output is too high (a positive delta), we decrease the weight. As noted in MIT lecture slides, the delta is literally the difference between the desired output and the actual output. The goal is to learn from mistakes: the bigger the difference, the bigger the adjustment needed.

Delta in Multi-Layer Networks (Backpropagation)

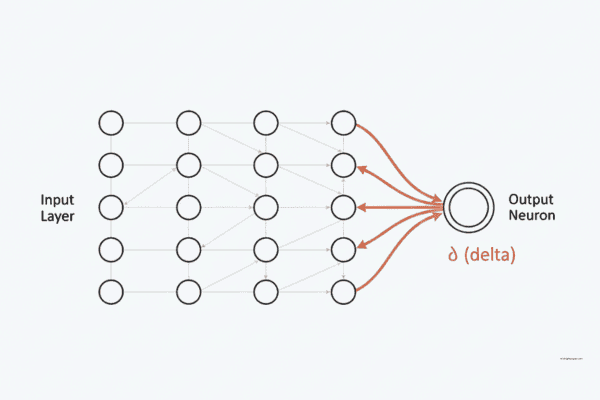

When we use multi-layer neural networks, the same idea applies, but the calculations become more complex. The generalised delta rule, which is the backpropagation algorithm, calculates an error (delta) for each neuron. In the output layer, the error comes from the difference between the target value and the actual output. For hidden layers, the error is calculated by taking the errors from the layer above and passing them backwards.

Training follows a specific order: first, we do a forward pass to compute all outputs. Next, we compute the errors and then perform a backward pass. During this step, the network uses the error from each neuron to update the weights. As one machine learning guide explains, backpropagation is a key method used to train neural networks by reducing the difference between predicted and actual outputs.

This rule is meant to adjust all the weights through gradient descent on the loss. It is a way of applying the simple delta rule to deeper networks by sending the output errors backwards through each layer.

The algorithm works in steps. First, it calculates all outputs with a forward pass. Next, it finds the error at the output nodes by subtracting the output from the target. Then, it moves backwards through each layer. For every hidden neuron, it calculates the delta by using the derivative of its activation function times the weighted sum of the deltas from the next layer.

This way, each hidden delta shows how much that neuron contributed to the errors above it. According to DeepAI’s glossary, the generalised delta rule (backprop) aims to reduce the error in a neural network’s output through gradient descent. The algorithm repeats this forward and backward process many times, gradually bringing all deltas closer to zero to improve prediction accuracy.

Learn More: Difference Between Machine Learning (ML), Artificial Intelligence (AI), and Deep Learning(DL)- 2025.

Calculating Delta in Practice

To calculate the error for a sigmoid output unit in a neural network, first find the delta at the output neuron.

delta output = output * (1 – output) * (target – output)

The formula is based on finding the change in the net input. For instance, if a neuron outputs 0.61 while the target is 0.50, then we calculate its delta as 0.61 multiplied by (1 minus 0.61) and then multiplied by (0.50 minus 0.61). In a practical example from GeeksforGeeks, an output neuron with a value of 0.67 and a target of 0.50 has a delta of roughly -0.0376, calculated as 0.67 times (1 minus 0.67) times (0.50 minus 0.67). Hidden neurons similarly calculate their delta, but they use the deltas from the next layer.

Specifically, to find a hidden delta, we multiply the neuron’s output by (1 minus its output) and then by the sum of its outgoing weights multiplied by the delta of each neuron in the layer above.

delta hidden = output_hidden * (1 – output_hidden) * Σ(weight_to_next_layer * delta_next_layer).

In the same example, one hidden neuron had a value of δ_3=0.56*(1-0.56)*(0.3*-0.0376), which is about −0.0027. This shows that if a hidden unit’s output differs significantly from what it should have contributed, its delta will be larger. This larger delta leads to bigger adjustments.

Figure: A simple neural network has 2 inputs, 2 hidden units, and 1 output after one forward pass. With inputs of 0.35 and 0.70, the hidden neurons produce outputs of 0.57 and 0.56, and the final output is 0.61. We calculate the error (delta) by comparing this output to the target, which could be 0.50. We then use this error to update the weights during training.

Learn More: What Are the Best AI Tools for Content Creation in 2025?

Adjusting Weights Using Delta

After we calculate the deltas for the output and hidden units, we adjust each weight based on the delta and the input that created it. The weight update rule is:

Δwᵢⱼ = η × δⱼ × xᵢ

In this formula, η is the learning rate, δ_j is the error term (delta) for neuron j, and x_i is the output of neuron i that connects to j. According to GeeksforGeeks, “δ_j is the error term for each unit.” This means that each weight change depends on the delta; a larger error signal leads to a larger adjustment. Over many iterations, these updates gradually reduce the overall error.

Eventually, the deltas become smaller as the network’s predictions approach the targets. Delta drives the gradient descent process, helping the model find weight values that minimise errors. As noted by DeepAI, backpropagation can “efficiently propagate errors backwards through the network and adjust weights accordingly.”

Delta in Various Network Architectures

The concept of a delta as an error term is important for all kinds of neural networks. This includes simple feedforward networks, deep convolutional networks used for image recognition, and recurrent networks meant for analysing time-series data. Training these networks involves calculating errors in the output and sending these errors back through the network. Even convolutional and recurrent layers use backpropagation or similar methods, meaning each filter or time-step neuron has a delta.

For example, DeepAI states that the generalised delta rule is used for feedforward networks that handle tasks like image and speech recognition, including modern CNNs. In all cases, the network compares its output to the desired target, calculates a delta (or error) for each output neuron, and then works backwards. While the structure of the network may alter how activations are calculated, the core idea remains the same: delta signals are crucial for updating weights and learning.

Conclusion

In simple terms, delta in neural networks refers to the error signal used for learning. It measures the difference between what the network produced and what it was supposed to produce, and it is often adjusted by the slope of the activation function.

During training, each neuron’s delta indicates “how wrong” it was. The network uses these deltas to change its weights through techniques like the delta rule or backpropagation, helping it improve its predictions. With more training, the deltas become smaller as the model learns to align its outputs with the targets. By continuously calculating deltas and updating weights, neural networks learn from their mistakes. This method of learning based on errors is fundamental to training neural networks of all kinds.

To learn more such facts in a very easy way and to keep yourself connected to this modern world, follow us for weekly updates, tips, and future-ready strategies.